Advanced RAG Techniques - Improving Retrieval for LLMs Using HyDE

HyDE (not the one from The Strange Case of Dr. Jekyll and Mr. Hyde) in the context of RAG stands for Hypothetical Document Embeddings. It is one of those techniques (or “tricks” as I like to call them) you can use in your retrieval pipelines to boost the relevance of retrieved results, especially when the query and the vectorized documents come from two totally different worlds. The idea is to have an LLM write a hypothetical answer to the user query and then use that hypothetical document to fetch the relevant documents. Before understanding HyDE, let’s take a peek into how traditional RAG retrieval works in the first place.

RAG Retrieval: The “Boring” Way!

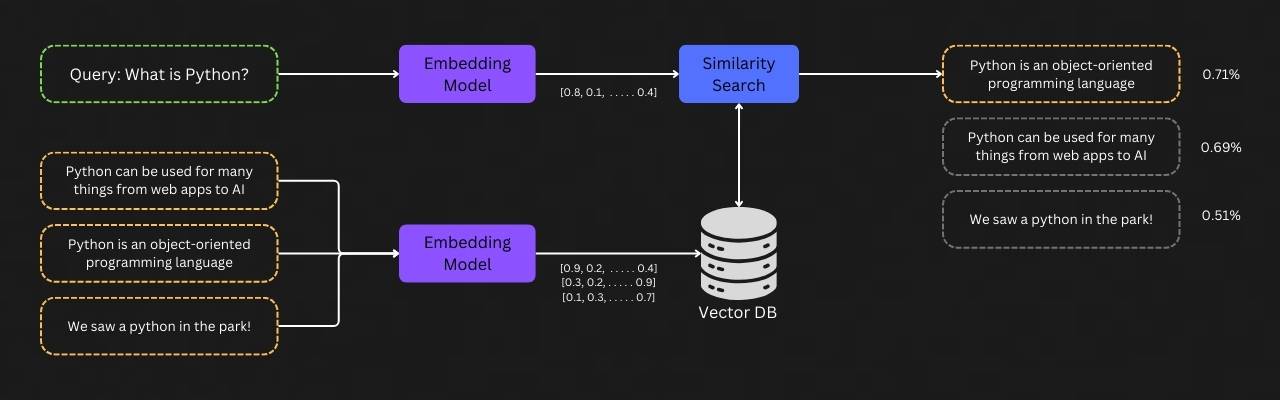

RAG (Retrieval-Augmented Generation) is an effective way to provide large language models with additional contextual data, helping the LLM generate more accurate responses to queries and reducing hallucinations. Crucial to this are bi-encoder models, which are trained to encode the semantic meaning of sentences into fixed-length vector representations called embeddings. Such models are typically referred to as “text embedding models”.

Our data is split into chunks (documents) according to a chunking strategy, and each document is saved as an embedding in a vector database. Each user query is also converted to an embedding, which is then compared against the stored embeddings in a similarity search. Since the embeddings encode semantic meaning, the most similar document is expected to contain the answer. It is then passed on to the LLM as context for generating the final response.
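To make the flow concrete, here is a minimal sketch of this “boring” retrieval loop. It is only an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real text embedding model, a plain Python list plays the role of the vector database, and the names `build_index` and `retrieve` are made up for this example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for a text embedding model (assumption): word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    # "Vector database" (assumption): a list of (document, embedding) pairs.
    return [(doc, embed(doc)) for doc in documents]


def retrieve(query, index, top_k=1):
    # Embed the query and rank the stored documents by similarity to it.
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


documents = [
    "Python is an object-oriented programming language.",
    "The Eiffel Tower is located in Paris.",
    "Bananas are rich in potassium.",
]
index = build_index(documents)
top = retrieve("What is Python?", index)
print(top)
```

In a real pipeline, `embed` would call an actual embedding model and the index would live in a vector store, but the shape of the loop stays the same.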

But if you look closely, the query “What is Python?” is not phrased anything like the document fetched, “Python is an object-oriented programming language.” The query is a question, and the document is an answer - two different contexts entirely. Whether the two end up close together in the embedding space comes down to how the particular embedding model was trained, which I have discussed in an earlier post. The key takeaway is that if we need the embedding model in our RAG pipeline to retrieve relevant results for a particular use case (for example, question-answering), one way to approach this is to fine-tune the embedding model for that task.

However, fine-tuning an embedding model can be tricky, especially if you don’t have enough data for it. Many text embedding models are trained on question-answer datasets such as MS MARCO, but this might not carry over to your data, especially if you are working in a niche domain that the dataset does not cover well. The result is an embedding model that performs poorly at retrieval.

RAG Retrieval: The “Mr HyDE” Way!

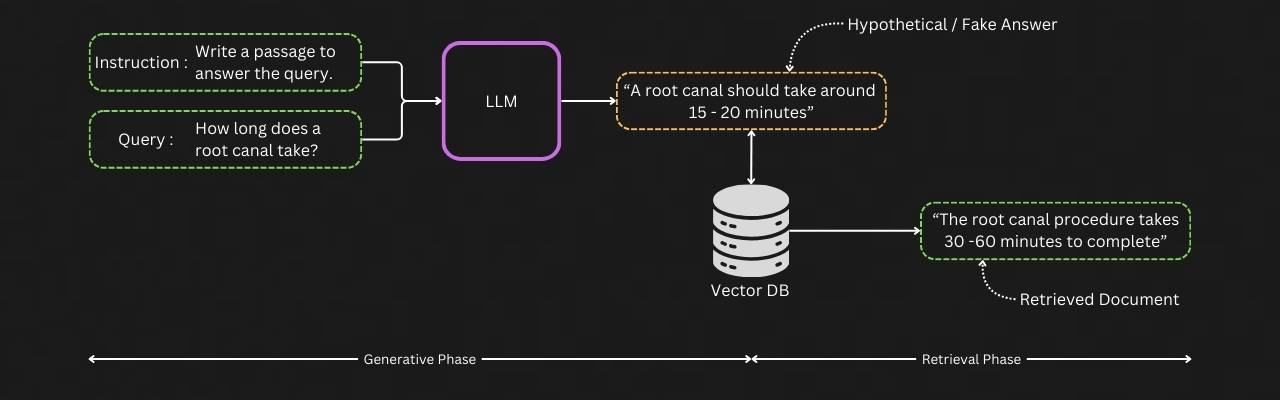

The technique of Hypothetical Document Embeddings (HyDE) was introduced in the paper “Precise Zero-Shot Dense Retrieval without Relevance Labels”. The idea in one line: “Fake it in order to fetch it”.

What HyDE does is simple: the task of retrieving relevant documents from a vector data store is now divided into two phases - a generative phase and a retrieval phase. The generative module (any instruction-tuned LLM) takes in the user query and accompanying instructions and generates a hypothetical/fake document. This fake response to the query might be factually incorrect, but it contains similar ideas and captures the relevance patterns of a real answer. Its embedding is then used to retrieve the most similar documents from the vector store using regular retrieval. The key idea here is that the comparison is now between like and like: the hypothetical document and the stored documents are both passages/answers, whereas in the earlier case the query was a question and the retrieved document was a passage/answer.
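The two phases can be sketched in a few lines of Python. Treat this as a rough illustration rather than the paper's implementation: `fake_llm` is a hypothetical stub standing in for any instruction-tuned LLM, `embed` is a toy bag-of-words substitute for a real embedding model, and the prompt wording and the name `hyde_retrieve` are my own assumptions.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def embed(text: str) -> Counter:
    # Toy stand-in for a text embedding model (assumption): word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def fake_llm(prompt: str) -> str:
    # Hypothetical stub for an instruction-tuned LLM. The canned passage
    # deliberately contains made-up details: a HyDE document may be wrong
    # on the facts and still look like the kind of text we want to find.
    return "Python is a programming language created in 2005 for building websites."


def hyde_retrieve(query: str, documents: List[str],
                  generate: Callable[[str], str], top_k: int = 1) -> List[str]:
    # Phase 1 (generative): ask the LLM for a hypothetical answer passage.
    prompt = f"Write a passage that answers the question.\nQuestion: {query}\nPassage:"
    hypothetical_doc = generate(prompt)
    # Phase 2 (retrieval): search with the fake document's embedding
    # instead of the query's embedding.
    h = embed(hypothetical_doc)
    ranked = sorted(documents, key=lambda d: cosine(h, embed(d)), reverse=True)
    return ranked[:top_k]


documents = [
    "Python is an object-oriented programming language.",
    "The Eiffel Tower is located in Paris.",
    "Bananas are rich in potassium.",
]
results = hyde_retrieve("What is Python?", documents, fake_llm)
print(results)
```

Note that the fake passage never reaches the final LLM prompt in this sketch. It is only used as a search key, so its wrong details do not end up in the retrieved context.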

The advantages of this are many:

- No longer do you need to gather datasets and spend time fine-tuning embedding models just to improve retrieval performance.

- You can prompt/instruct the LLM to better match the data present in your internal vector database. For example, if your database consists of various research works, the instruction can be modified to “Write a passage from a scientific paper that answers the question.”

- The authors also apply this technique to non-English languages and report improved retrieval there as well, as the relevance patterns in the generated fake document help retrieve the correct document from the vector database.
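As a small illustration of the last two points, the instruction sent to the LLM can be built from a template that depends on your data. This is only a sketch: the dictionary keys, the `build_hyde_prompt` helper, the `language` suffix, and the “default” wording are assumptions made up for this example. Only the “scientific” instruction is the one quoted above.

```python
from typing import Optional

# Instruction templates keyed by the kind of data in the vector database.
# The keys and the "default" wording are illustrative assumptions.
HYDE_INSTRUCTIONS = {
    "default": "Write a passage that answers the question.",
    "scientific": "Write a passage from a scientific paper that answers the question.",
}


def build_hyde_prompt(question: str, domain: str = "default",
                      language: Optional[str] = None) -> str:
    # Fall back to the generic instruction for unknown domains.
    instruction = HYDE_INSTRUCTIONS.get(domain, HYDE_INSTRUCTIONS["default"])
    if language:
        # Assumed way to steer the LLM towards the language of the stored documents.
        instruction += f" Write the passage in {language}."
    return f"{instruction}\nQuestion: {question}\nPassage:"


prompt = build_hyde_prompt("How does attention work in transformers?", domain="scientific")
print(prompt)
```

The resulting string is what you would hand to the generative phase. Everything downstream (embedding the fake document and running the similarity search) stays unchanged.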

Conclusion

To wrap it up, HyDE is just one of many techniques available to enhance retrieval in RAG pipelines without having to gather massive datasets or fine-tune models. By creating a hypothetical document, we search with text that shares the same context as the documents we want to retrieve, which keeps the pipeline simple while improving the relevance of the results.