Symmetric vs Asymmetric Semantic Search

Semantic search is one of the primary components of the retriever in most RAG systems. Regular text search retrieves text by matching words or phrases. Semantic search, by contrast, compares the meanings of words or phrases. It does this by converting text into numeric vectors called embeddings, each of which captures the meaning of a sentence. Retrieval then returns the phrases whose embeddings are closest to the embedding of the query.
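To make "closest embedding" concrete, here is a minimal sketch of retrieval by cosine similarity. The three-dimensional vectors are hand-made stand-ins for real embeddings (an assumption for illustration only), and `retrieve` is a hypothetical helper, not part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vec, corpus, top_k=2):
    """Return the top_k (text, score) pairs, best match first."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

# Hand-made toy vectors; a real embedding model produces hundreds of dimensions.
corpus = {
    "Python is a programming language": [0.9, 0.1, 0.0],
    "Pythons are large snakes": [0.1, 0.9, 0.1],
    "Paris is the capital of France": [0.0, 0.1, 0.9],
}
query_vec = [0.8, 0.2, 0.1]  # pretend embedding of a question about Python code

results = retrieve(query_vec, corpus)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

Cosine similarity is a common choice here because it ignores vector length and looks only at direction, but other distance measures can be used as well.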

Many open-source embedding models are available, each with its own strengths. When choosing one, it is important to consider the type of semantic search the model was trained for. A model that does not match your use case can limit what your RAG system is able to do.

The two types of semantic search are symmetric and asymmetric. So, how do you tell them apart and choose between them?

Symmetric Semantic Search

The sbert.net documentation describes it this way: “For symmetric semantic search, your query and the entries in your corpus are of about the same length and have the same amount of content.”

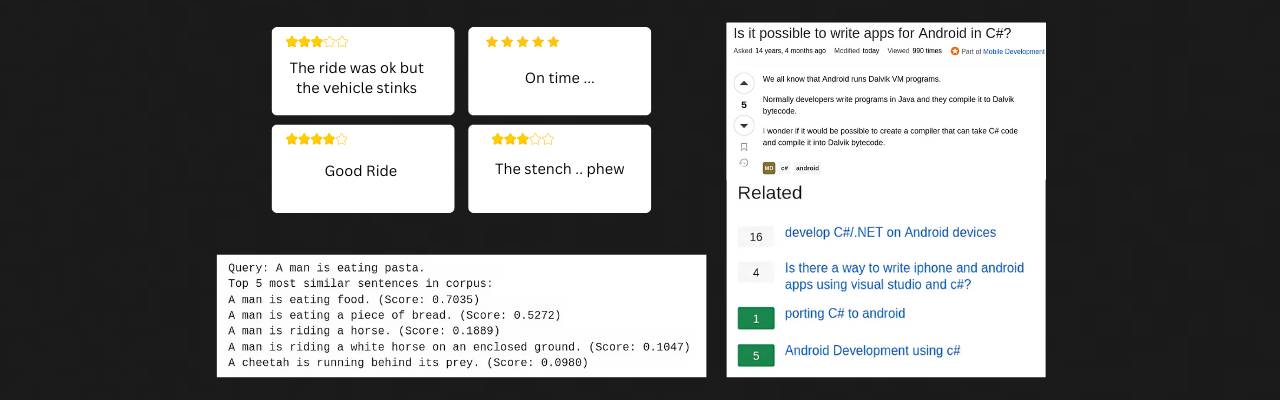

This type of search is primarily used when the goal is to find content related or similar to a given query. For example, it can be used to identify search terms related to a query. It can also help find duplicate content or similar reviews in a database.
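Duplicate detection, for instance, can be sketched as comparing every pair of entries and keeping those above a similarity threshold. The vectors, the review texts, and the 0.9 threshold below are all illustrative assumptions; in practice the vectors would come from a symmetric embedding model and the threshold would be tuned on your data.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def find_near_duplicates(embeddings, threshold=0.9):
    """Return (text_a, text_b, score) for every pair scoring at or above threshold."""
    pairs = []
    for (text_a, vec_a), (text_b, vec_b) in combinations(embeddings.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score >= threshold:
            pairs.append((text_a, text_b, score))
    return pairs

# Toy vectors standing in for real embeddings of three reviews.
reviews = {
    "Battery life is great": [0.9, 0.1, 0.1],
    "The battery lasts a long time": [0.85, 0.2, 0.1],
    "Shipping took three weeks": [0.1, 0.1, 0.9],
}

duplicates = find_near_duplicates(reviews)
for text_a, text_b, score in duplicates:
    print(f"{score:.3f}  {text_a!r} ~ {text_b!r}")
```

Comparing all pairs grows quadratically with the number of entries, so this brute-force form only suits small collections.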

In symmetric search, the query and the retrieved document share roughly the same meaning. Even if the two are interchanged, so that the document becomes the query, the search still makes sense.

Asymmetric Semantic Search

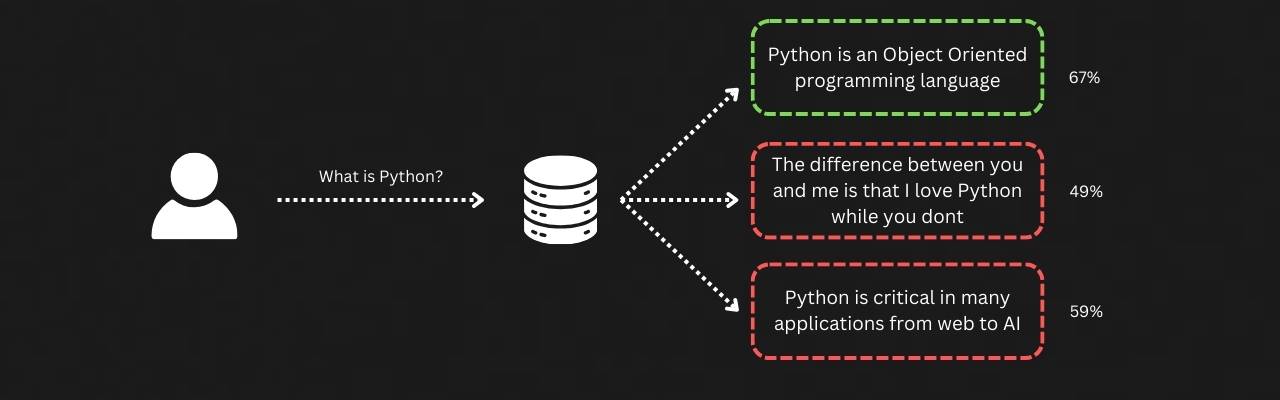

sbert.net describes asymmetric semantic search as follows: “For asymmetric semantic search, you usually have a short query (like a question or some keywords) and you want to find a longer paragraph answering the query.”

Use this primarily when the query and the retrieved documents come from two different contexts. An example is when the input is a question and the retrieved document is an answer to it. Most chat-based RAG applications (think Perplexity.ai) will benefit from asymmetric search.

In this type, the query and the retrieved document need not share the same meaning.

Why Does the Choice of Model Matter?

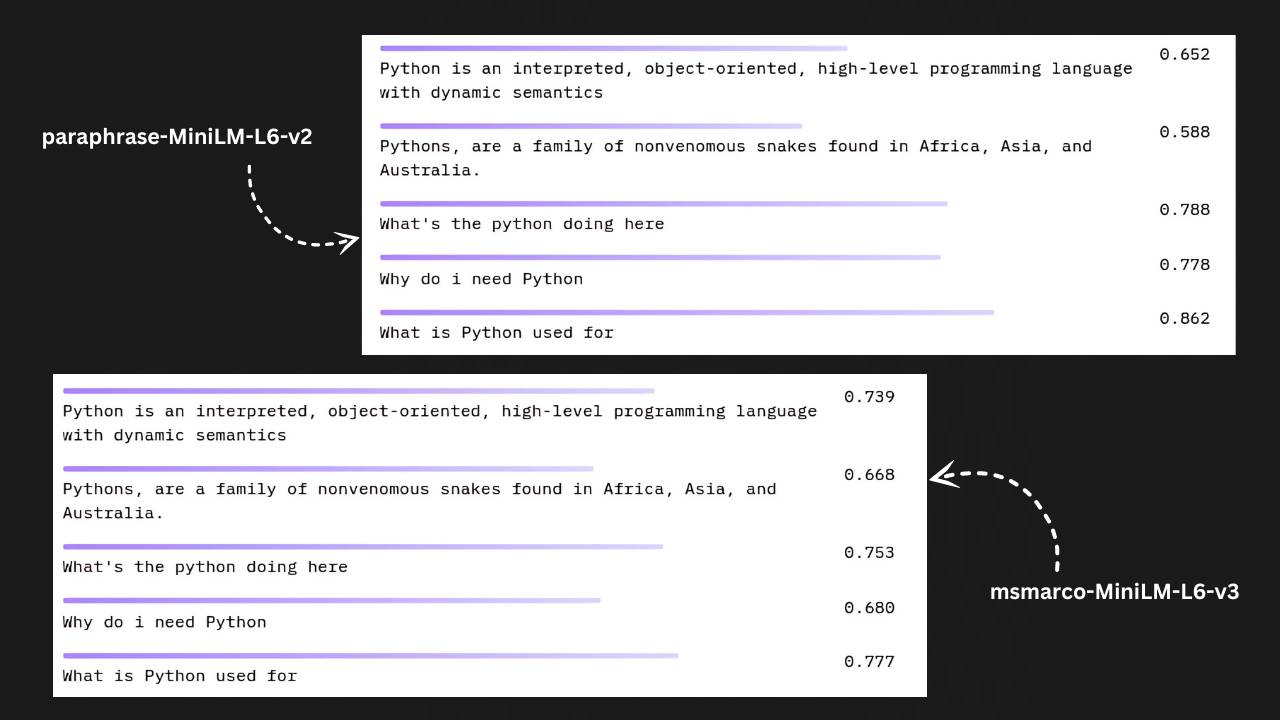

So, how much of an impact does this difference have on your semantic search applications? To compare, we’ll use the “paraphrase-MiniLM-L6-v2” and “msmarco-MiniLM-L6-v3” sentence-transformer models. The paraphrase model is trained for paraphrase mining, which is the task of finding similar sentences. The other model has been trained on the MS MARCO dataset released by Microsoft, which is a huge corpus of question-answer pairs. Both models use the same MiniLM-L6 architecture; the only difference is in how they were trained.
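A comparison like this can be set up with a small harness. The sketch below assumes the `sentence-transformers` package is installed; `load_encoder`, `rank_documents`, and `compare_models` are hypothetical helper names, and the model names in the commented usage are copied from this article as written, so check the exact identifiers on the model hub before running it.

```python
def load_encoder(model_name):
    """Return a function mapping a list of strings to unit-length embeddings.

    Assumes sentence-transformers is installed and model_name resolves
    to a published checkpoint.
    """
    from sentence_transformers import SentenceTransformer  # imported lazily

    model = SentenceTransformer(model_name)
    return lambda texts: model.encode(texts, normalize_embeddings=True)

def rank_documents(encode, query, documents):
    """Score each document against the query and sort best-first.

    encode must return unit-length vectors, so the dot product below
    equals cosine similarity.
    """
    vectors = encode([query] + documents)
    query_vec, doc_vecs = vectors[0], vectors[1:]
    scored = [
        (doc, float(sum(q * d for q, d in zip(query_vec, vec))))
        for doc, vec in zip(documents, doc_vecs)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

def compare_models(encoders, query, documents):
    """Print one ranking per encoder so the orderings can be compared."""
    for name, encode in encoders.items():
        print(name)
        for doc, score in rank_documents(encode, query, documents):
            print(f"  {score:.3f}  {doc}")

# Example wiring (downloads both models on first use):
# names = ("paraphrase-MiniLM-L6-v2", "msmarco-MiniLM-L6-v3")
# compare_models(
#     {name: load_encoder(name) for name in names},
#     "What is Python used for?",
#     ["What is the Python doing here?", "<your candidate answer passage>"],
# )
```

Passing the encoder in as a function keeps the ranking logic independent of any one model, which makes it easy to swap in a third model later.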

The results shown in the figure reveal a lot about the models. With the paraphrase model (symmetric search), similar questions like “What is Python used for” and “What is the Python doing here?” receive the highest similarity scores. With the msmarco model (asymmetric search), similar questions still rank highest, but the score for the actual answer rises noticeably, from 0.652 to 0.739. Let’s try a different example to confirm this.

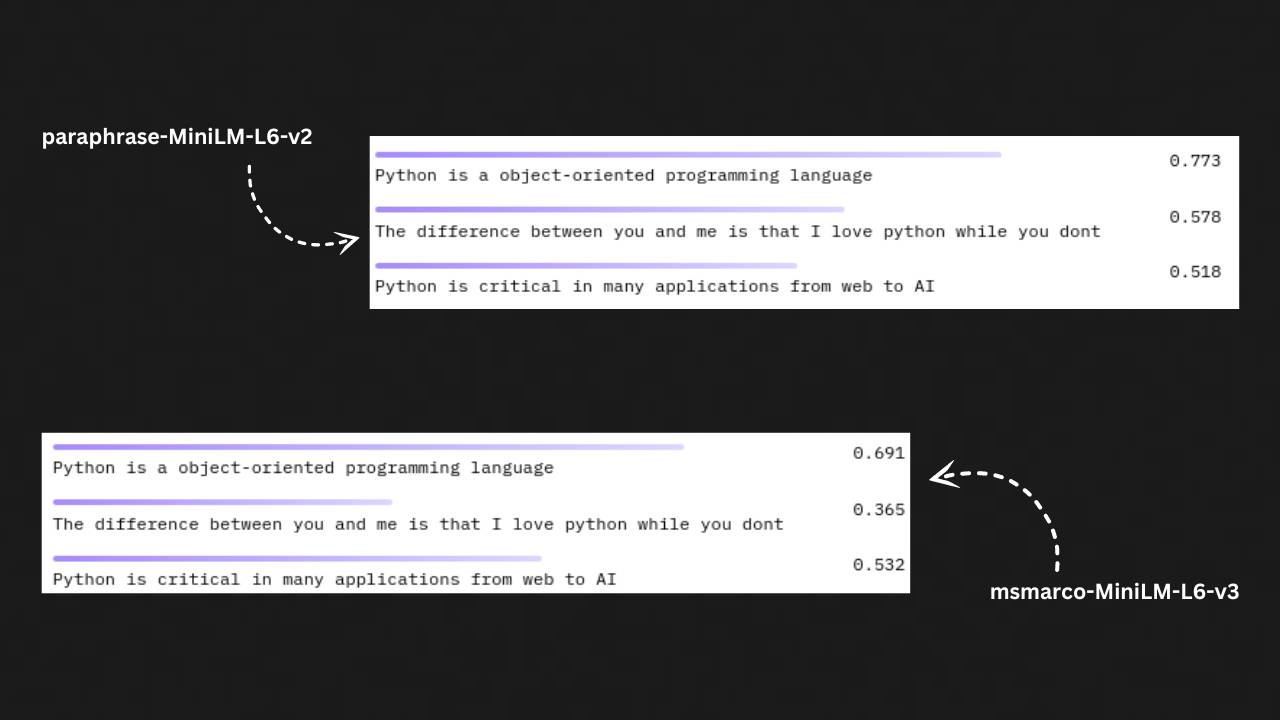

Here, the distinction between the two models is even clearer. The paraphrase model struggles to rank the documents and gives the wrong document a higher score. In the msmarco model’s output, the correct documents are ranked higher, with a big gap between them and the incorrect one.

If the goal is to find questions similar to the query, symmetric search gives the best results. However, if the goal is to retrieve answers from a database, the asymmetric model is better suited to the task.

Before you “rm -rf” this page

As we have seen, an embedding model’s performance on a given task is influenced by its training process and the dataset used for training. It’s always good to review the model card before using an embedding model. If the dataset and training approach align with your application, you are less likely to run into head-scratching moments while tinkering with it. If you are still unable to get good results, it’s time for some fine-tuning 🛠️.